Key takeaways:

- AI counts tokens, not words, so it can’t hit exact word counts.

- Most AI models have hidden limits that keep answers short.

- Breaking content into chunks is the only real way to get long output.

AI is everywhere now, but if you’ve ever tried to get a 2,000-word article out of ChatGPT or Gemini, you know it just won’t happen. You might get 700, maybe 1,000 words, but never what you asked for. I’ve been there, and it’s frustrating.

So, why does AI always cut you short? I’m breaking down the real reasons behind these limits, and how you can work around them if you need that long-form content. Stick around, because I’ll show you what’s possible—and what’s not—with today’s AI.

Why AI Always Stops Short: The Token Problem Explained

AI doesn’t count words like we do. It counts tokens. A token is just a chunk of text—sometimes a word, sometimes just a few letters. For example, “Darkness” could be two tokens: “dark” and “ness.” So when you ask for 2,000 words, the model is really thinking in tokens, not words.
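Here’s a rough sketch of why word counts and token counts drift apart. It’s a toy: `TOY_VOCAB` is a made-up vocabulary and `toy_tokenize` uses simple greedy longest-match, which is an assumption for illustration. Real models learn their vocabularies from data and will split text differently.

```python
# Toy illustration only: real tokenizers learn their vocabulary from data.
# TOY_VOCAB is a made-up, hypothetical vocabulary.
TOY_VOCAB = {"dark", "ness", "the", "of", "night", "un", "end", "ing"}


def toy_tokenize(word: str, vocab: set[str] = TOY_VOCAB) -> list[str]:
    """Split a word by greedy longest-match against the vocabulary.

    Characters that match no vocabulary entry become single-character tokens.
    """
    word = word.lower()
    tokens = []
    i = 0
    while i < len(word):
        # Try the longest possible piece first, then shrink.
        for j in range(len(word), i, -1):
            if word[i:j] in vocab:
                tokens.append(word[i:j])
                i = j
                break
        else:
            # Nothing in the vocabulary starts here: emit one character.
            tokens.append(word[i])
            i += 1
    return tokens


def count_words_and_tokens(text: str) -> tuple[int, int]:
    """Return (word count, toy token count) for whitespace-separated text."""
    words = text.split()
    return len(words), sum(len(toy_tokenize(w)) for w in words)


print(toy_tokenize("Darkness"))  # ['dark', 'ness']
print(count_words_and_tokens("the darkness of the unending night"))  # (6, 9)
```

Six words turn into nine toy tokens here, and the gap changes with every sentence. That’s why a model that budgets in tokens has no clean way to land on an exact word count.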

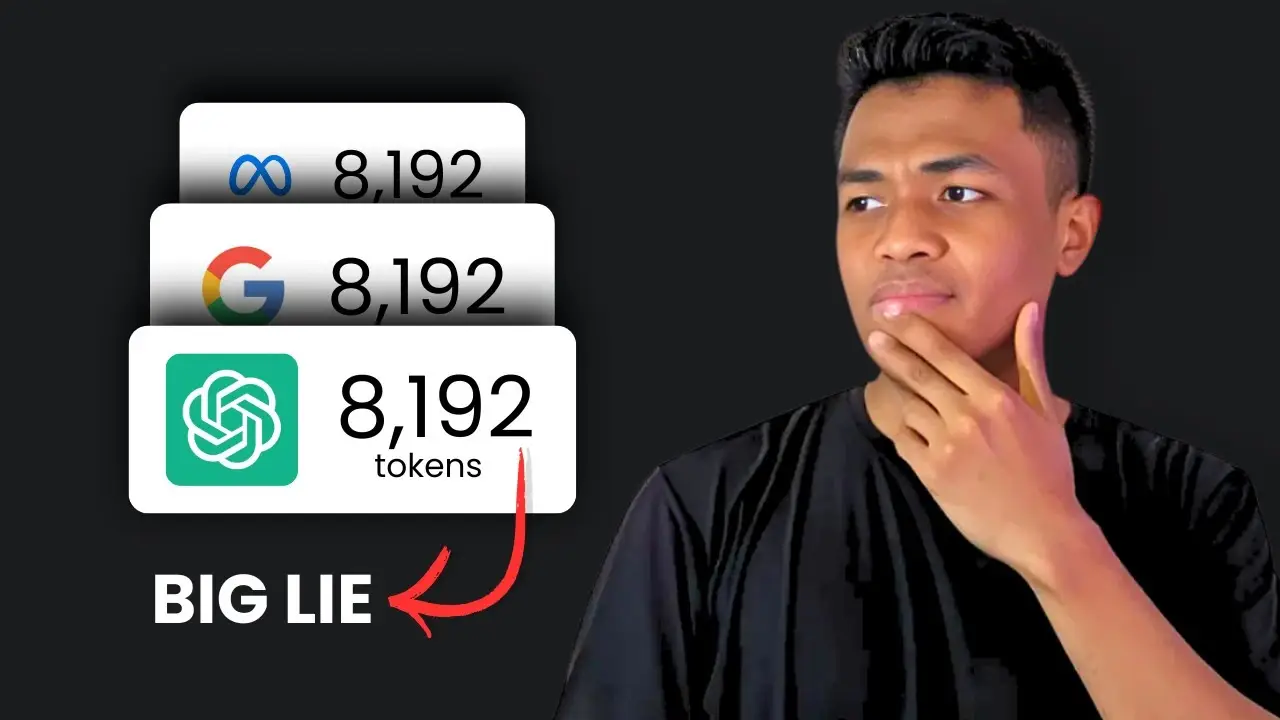

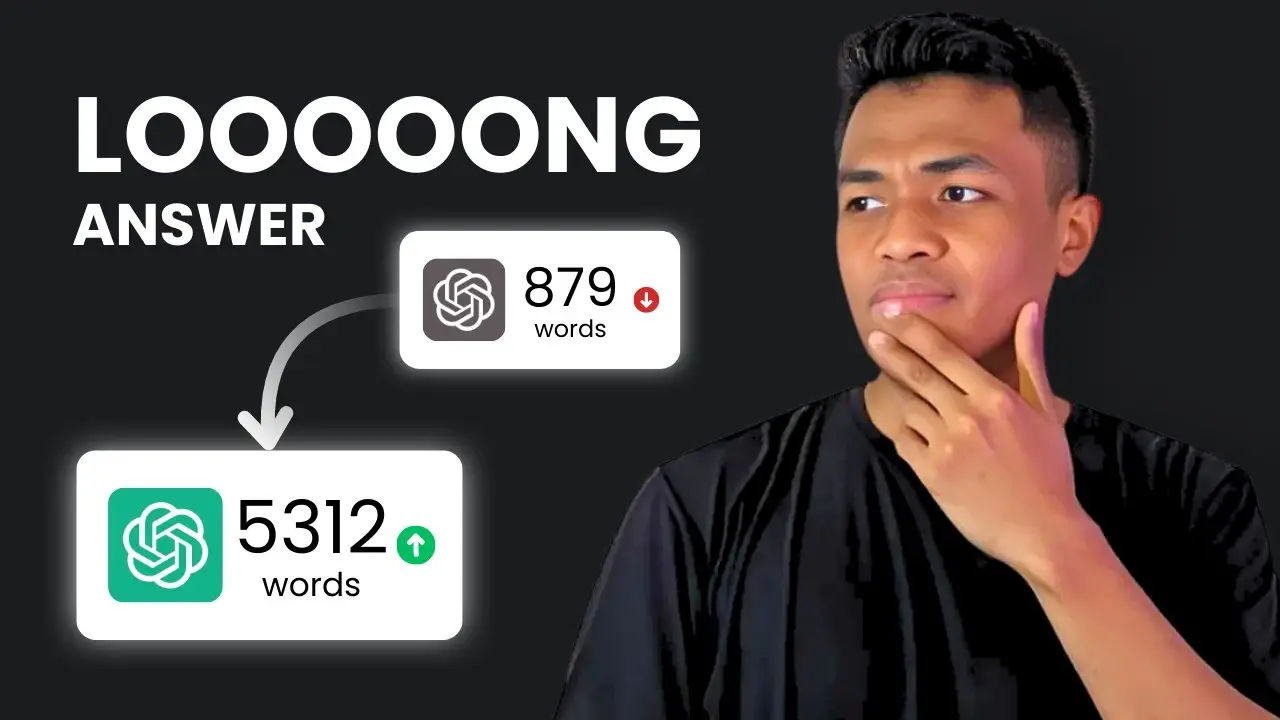

Most big models like ChatGPT or Gemini have a token limit. For the original GPT-4, that’s a context window of 8,192 tokens, which should be 5,000–6,000 words in theory. But in practice, you rarely get more than 1,000 words in one go. Why? Part of the answer is that the window covers both your prompt and the model’s answer. If your prompt is long, there’s even less room for the response.
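To make the arithmetic concrete, here’s a small sketch of how the prompt eats into the response budget. The 0.75 words-per-token ratio is a common rule of thumb for English, not an exact figure, and the function names are my own. I’m using an 8,192-token window as the example.

```python
WORDS_PER_TOKEN = 0.75  # rough rule of thumb for English; an assumption


def response_budget(context_window: int, prompt_tokens: int) -> int:
    """Tokens left for the answer when prompt and answer share one window."""
    return max(context_window - prompt_tokens, 0)


def tokens_to_words(tokens: int) -> int:
    """Approximate word count for a given token count."""
    return round(tokens * WORDS_PER_TOKEN)


window = 8_192  # example window size
for prompt_tokens in (200, 2_000, 6_000):
    left = response_budget(window, prompt_tokens)
    print(f"prompt={prompt_tokens:>5} tokens -> room for {left:>5} tokens "
          f"(~{tokens_to_words(left)} words)")
```

Even with a 6,000-token prompt there’s still room for well over 1,000 words on paper, which is why the hard token limit alone doesn’t explain the short answers.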

The Soft Limit: Why AI Models Keep It Short on Purpose

Even if the model says it can output 8,000 tokens, you almost never get that much. AI companies put in “soft limits” behind the scenes. These are invisible rules that make the AI give shorter, more concise answers, even when you ask for more.

Why do they do this? It’s all about saving money and keeping things running smoothly. Longer responses use more computing power, which costs more. So, they quietly nudge the AI to keep things brief. Sometimes, system prompts wrapped around the model tell it to be concise, no matter what you ask.

System Prompts: The Invisible Hand Guiding AI Responses

Most AI chat products run the model with hidden instructions, called system prompts, that guide how it answers. These can tell the AI to be short, avoid certain topics, or stick to a specific style. You usually don’t see these prompts, but they’re always there, shaping what the AI spits out.

Some companies, like Anthropic with their Claude models, actually publish their system prompts. You’ll find instructions in there that push the AI toward concise answers. That’s why, even if you ask for 5,000 words, you’ll get a summary or a much shorter version.

Why Token Limits Make Long-Form Content So Hard

Let’s say you want a 2,000-word blog post. You give the AI a detailed prompt, but it stops at 800 words. You try again, same result. The reason is simple: the model runs into a limit, either the hard token cap or one of the soft limits described above, and just stops, sometimes mid-sentence.

Here’s a quick table showing common AI token limits and what you actually get:

| Model | Max Output Tokens | Theoretical Max Words | Real-World Output (Words) |
|---|---|---|---|
| GPT-3 | 4,096 | ~3,000 | 500–700 |
| GPT-4 | 8,192 | ~6,000 | 700–1,000 |
| Gemini 1.5 | 8,192 | ~6,000 | 700–1,000 |
| Claude 3.7 Sonnet | 128,000 | ~96,000 | 1,500–1,700 |

Real-world output is what you’ll probably get in one response, not the theoretical max.

Chopping It Up: The Only Real Workaround

If you’re desperate for long content, you have to break it into pieces. Ask the AI for the first half, then the second half, and so on. It’s not pretty, but it works. You can also try feeding the AI its own previous output to keep things going, but it often loses track and gets repetitive or inconsistent.
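Here’s roughly what that looks like if you script it. This is a sketch, not a finished tool: `generate` is a hypothetical stand-in for whatever model call you use (it takes a prompt and returns text), and `fake_generate` is a stub so the example runs without any API. Passing the tail of the existing text into each prompt is my own attempt to cut down on repetition.

```python
from typing import Callable

# `generate` stands in for your model call (hypothetical):
# it takes a prompt string and returns the model's text.
Generate = Callable[[str], str]


def write_in_sections(topic: str, outline: list[str], generate: Generate,
                      words_per_section: int = 500,
                      context_words: int = 150) -> str:
    """Request one outline section at a time and join the results."""
    parts: list[str] = []
    for heading in outline:
        # Show the model the tail of what exists so far.
        so_far = " ".join(parts).split()
        tail = " ".join(so_far[-context_words:])
        prompt = (
            f"Article topic: {topic}\n"
            f"Full outline: {'; '.join(outline)}\n"
            f"Text so far ends with: {tail or '(nothing yet)'}\n"
            f"Write only the section '{heading}' in about "
            f"{words_per_section} words. Do not repeat earlier sections."
        )
        parts.append(generate(prompt).strip())
    return "\n\n".join(parts)


def fake_generate(prompt: str) -> str:
    """Stub model for demonstration: echoes which section was requested."""
    line = next(l for l in prompt.splitlines() if l.startswith("Write only"))
    section = line.split("'")[1]
    return f"[{section} text]"


article = write_in_sections(
    "Why AI output is short",
    ["Intro", "Token limits", "Workarounds"],
    fake_generate,
)
print(article)
```

Swap `fake_generate` for a real model call and each section stays small enough to come back whole. You still have to read the joined result for consistency.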

Some people use recursive summarizing—breaking the content into chunks, summarizing each, then joining them. But this usually kills the flow and makes the writing feel choppy.
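For the curious, the chunk-summarize-join loop can be sketched like this. `summarize` is a hypothetical callable (in practice, a model call), and `first_ten_words` is a deliberately dumb stub so the example runs on its own. The chunk sizes are arbitrary.

```python
from typing import Callable


def split_into_chunks(text: str, chunk_words: int) -> list[str]:
    """Split text into consecutive chunks of at most `chunk_words` words."""
    words = text.split()
    return [" ".join(words[i:i + chunk_words])
            for i in range(0, len(words), chunk_words)]


def recursive_summary(text: str, summarize: Callable[[str], str],
                      chunk_words: int = 400, max_words: int = 400) -> str:
    """Summarize chunk by chunk, repeating until the result is short enough."""
    while len(text.split()) > max_words:
        chunks = split_into_chunks(text, chunk_words)
        shorter = " ".join(summarize(c) for c in chunks)
        if len(shorter.split()) >= len(text.split()):
            break  # the summarizer is not shrinking the text; stop
        text = shorter
    return text


def first_ten_words(chunk: str) -> str:
    """Stub summarizer (hypothetical): keeps the first ten words of a chunk."""
    return " ".join(chunk.split()[:10])


long_text = " ".join(f"word{i}" for i in range(2000))
summary = recursive_summary(long_text, first_ten_words,
                            chunk_words=100, max_words=50)
print(len(summary.split()))  # 20
```

Notice that each chunk is summarized without seeing its neighbors. That’s exactly where the choppy feel comes from.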

Coherence Falls Apart Over Long Content

Even if you somehow get 2,000 words out of an AI, the quality drops fast. The model forgets what it wrote earlier, repeats itself, or loses the thread of the story. You’ll see plot holes, mixed-up names, or just bland, repetitive writing.

AI is great for short stuff—blog posts, emails, maybe a short story. But for anything long, you’ll spend more time fixing mistakes than if you wrote it yourself.

Bigger Models, Bigger Limits—But Still Not Enough

Some newer models like Claude 3.7 Sonnet have much bigger token limits, up to 128,000 tokens. That sounds huge, but in real life, you still can’t get a 10,000-word article in one shot. You might get 1,700 words, which is better, but still not what’s advertised.

Even when you pay for premium access, most platforms keep these limits in place. Sometimes, third-party tools let you push the boundaries, but it’s hit or miss.

Why AI Companies Do This: Money and Safety

Longer answers cost more to generate. Every word uses up server time and electricity. Plus, longer outputs are harder to monitor for safety, so companies keep things short to avoid trouble. It’s not just about tech—it’s about business and trust, too.

What Actually Works: My Go-To Tips for Longer AI Content

- Ask for more words than you need. If you want 2,000, ask for 3,000 or 4,000.

- Break your request into sections: intro, body, conclusion.

- Use follow-up prompts to stitch content together.

- Try models with bigger token limits, like Claude 3.7 Sonnet, if you can.

- Don’t expect perfect coherence in long content—always review and edit.
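If you’d rather automate the first and third tips, a loop like this is one way to do it. Again, this is a sketch under assumptions: `generate` is a hypothetical model call, `stub_model` pretends to be a model that always stops at 300 words, and the 1.5× over-ask factor is just a starting guess.

```python
from typing import Callable


def write_until(target_words: int, first_prompt: str,
                generate: Callable[[str], str],
                overask: float = 1.5, max_rounds: int = 10) -> str:
    """Keep sending follow-up prompts until the draft reaches target_words."""
    asked_for = int(target_words * overask)  # ask for more than you need
    draft = generate(f"{first_prompt}\nAim for about {asked_for} words.")
    for _ in range(max_rounds):
        have = len(draft.split())
        if have >= target_words:
            break
        # Remind the model where the draft left off.
        tail = " ".join(draft.split()[-100:])
        follow_up = (
            f"Continue the piece from where it stops. Last words: {tail}\n"
            f"Write about {target_words - have} more words without recapping."
        )
        draft += "\n\n" + generate(follow_up)
    return draft


def stub_model(prompt: str) -> str:
    """Hypothetical stand-in for a model that always stops at 300 words."""
    return " ".join(["lorem"] * 300)


draft = write_until(1_000, "Write an article about token limits.", stub_model)
print(len(draft.split()))  # 1200
```

The `max_rounds` cap is there so the loop can’t run forever if the model keeps returning almost nothing. The stitched draft still needs the review pass from the last tip.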

FAQs

Why can’t AI just count words and give me what I ask for?

AI counts tokens, not words. Tokens can be shorter or longer than words, so it never matches up exactly.

What’s the real limit for AI-generated content length?

Most models cap out at about 700–1,000 words per response, even if the theoretical limit is higher.

Can I get around the limit by paying for premium?

Usually, no. Even paid versions have hidden limits to control costs and keep things running smoothly.

Why does AI lose track in long content?

AI can’t remember what it wrote earlier in the same way a human does, so it repeats itself or forgets details over long stretches.

Is there any AI that can write a whole book in one go?

Not yet. Even the biggest models can’t handle a full book or long essay in a single response. You have to break it up and piece it together yourself.

That’s the real story behind why AI can’t write long content right now. If you want more tips or want to see how I use these models for daily writing, check out the video above. 👆📝